I recently switched my LAMP virtual server to a different VPS provider.

That is the LAMP server serving you this site, so the migration worked!

Here are the steps, for future reference. Mostly for myself, but maybe you — someone who came here from Google — can use this too. This should work on any small to medium sized VPS.

Let’s go!

Lower your DNS records TTL value

When you switch to a new VPS, you will get a new IP address (probably two: IPv4 and IPv6). And you probably have one or more domain names that point to that IP. Those records will have to be changed for a successful migration.

You need to prepare your DNS TTL.

To do this, set your DNS TTL to one minute (60 seconds), so that when you make the switch, your DNS change propagates swiftly. Don’t do this right before the switch, of course: resolvers will still have the old, longer TTL cached, so it will have no effect. Change it at least 48 hours in advance.
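To confirm that the lowered TTL is actually being served before migration day, you can look at the answer section that `dig` prints. This is a minimal sketch, assuming `dig` (Debian package `dnsutils`) is installed. `example.com` is a placeholder, and `answer_ttl` is a helper name made up for this example:

```shell
#!/bin/sh
# answer_ttl: read `dig +noall +answer` output on stdin and print the TTL
# (second column) of every record line.
answer_ttl() {
    awk 'NF >= 5 && $1 !~ /^;/ { print $2 }'
}

# Typical use (example.com is a placeholder for your own domain):
#   dig +noall +answer example.com A    | answer_ttl
#   dig +noall +answer example.com AAAA | answer_ttl
# A caching resolver reports the remaining TTL, so the number counts
# down between queries; it should never exceed the value you configured.
```

Once the printed value stays at or below 60, the old TTL has expired from that resolver.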

Set up a new VPS with the same OS

Don’t go from Ubuntu to Debian or vice-versa if you don’t want any headaches. Go from Debian 10 to Debian 10. Or CentOS 8 to CentOS 8. Or what have you.

This blog focusses on Debian.

Install all your packages: Apache, MySQL, PHP and what else you need.

My advice is to use the package configs! Do not try to copy over package settings from the old server, except where it matters; more on that later.

This starts you fresh.

PHP

Just install PHP from the package repository. If you have specific php.ini settings, change those; otherwise you should be good to go. Most Debian packages are fine out of the box for a VPS.

I needed the following extra packages:

apt-get install php7.4-gd php7.4-imagick php7.4-mbstring php7.4-xml php7.4-bz2 php7.4-zip php7.4-curl php7.4-mysql php-twig

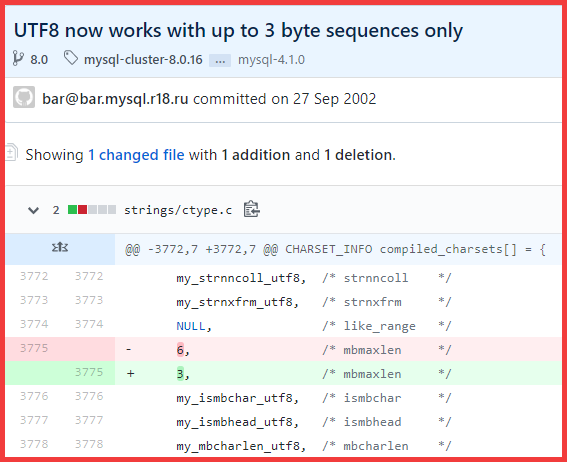
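A quick way to check that the extensions really are loaded is to compare the output of `php -m` against the list you expect. This is a minimal sketch: `missing_php_modules` is a made-up helper name, and the module names are my assumption of what the packages above register (for example, `php7.4-mysql` showing up as `mysqli`). Adjust the list to your own setup:

```shell
#!/bin/sh
# missing_php_modules: read `php -m` output on stdin and print each
# expected module that is not in the list. Matching is done on whole
# lines, so "xml" is not satisfied by "libxml".
missing_php_modules() {
    loaded=$(tr '[:upper:]' '[:lower:]')
    for mod in gd imagick mbstring xml bz2 zip curl mysqli; do
        printf '%s\n' "$loaded" | grep -qx "$mod" || printf '%s\n' "$mod"
    done
}

# Typical use on the new server (prints nothing when all are loaded):
#   php -m | missing_php_modules
```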

MySQL/MariaDB

apt-get install mariadb-server

Run this after a fresh MariaDB installation

/usr/bin/mysql_secure_installation

Now you have a clean (and somewhat secure) MariaDB server, with no databases (except the default ones).

On the old server, use the following tool (part of Percona Toolkit) to export MySQL/MariaDB user accounts and their privileges. Later we will export and import all databases, but that is just data. This tool is the preferred way to deal with the export and import of user accounts:

pt-show-grants

This generates a bunch of GRANT queries. Run these on the new server (clean them up first if you need to, e.g. delete old users), so that after you import the databases, all the database user rights are correct.
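If the cleanup means dropping a few stale accounts, you can filter the dump instead of editing it by hand. The sketch below assumes that each account's block in the pt-show-grants output starts with a `-- Grants for 'user'@'host'` comment line. `drop_grants_for` is a made-up helper name and `olduser` is a placeholder:

```shell
#!/bin/sh
# drop_grants_for USER: filter pt-show-grants output on stdin, removing
# every block that belongs to USER (on any host). All other lines,
# including the dump header, pass through unchanged.
drop_grants_for() {
    awk -v user="$1" '
        /^-- Grants for / { skip = (index($0, "\047" user "\047@") > 0) }
        !skip { print }
    '
}

# Typical use (olduser is a placeholder):
#   old server:  pt-show-grants > grants.sql
#   new server:  drop_grants_for olduser < grants.sql | mysql
```

Read the filtered file before feeding it to the new server; it is only a handful of lines per account.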

Set this on the old server. It makes InnoDB do a full purge and change buffer merge when it shuts down, which helps for processing later.

SET GLOBAL innodb_fast_shutdown=0

Rsync all the things

This is probably the most time-consuming step. My advice is to do it once to get a full initial copy, and once more right before the switch to pick up the latest changes, which will be way faster. Rsync is the perfect tool for this, because it is smart enough to only sync changes.

Make sure the new server can connect via SSH (as root) to the old server: my advice is to deploy the SSH keys (you should know how this works, otherwise you have no business reading this post ;)).

With that in place you can run rsync without password prompts.

My rsync script looks like this, your files and locations may be different of course.

Some folders I rsync straight to where I want them (e.g. /var/log/apache2); others I put in a backup dir for reference and manual copying later (e.g. the complete /etc dir).

#Sync all necessary files.
#Homedir skip .ssh directories!
rsync -havzP --delete --stats --exclude '.ssh' root@139.162.180.162:/home/jan/ /home/jan/
#root home
rsync -havzP --delete --stats --exclude '.ssh' root@139.162.180.162:/root/ /root/
#Critical files
rsync -havzP --delete --stats root@139.162.180.162:/var/lib/prosody/ /home/backup/server.piks.nl/var/lib/prosody
rsync -havzP --delete --stats root@139.162.180.162:/var/spool/cron/crontabs /home/backup/server.piks.nl/var/spool/cron/crontabs
#I want my webserver logs
rsync -havzP --delete --stats root@139.162.180.162:/var/log/apache2/ /var/log/apache2/
#Here are most of your config files. Put them somewhere safe for reference
rsync -havzP --delete --stats root@139.162.180.162:/etc/ /home/backup/server.piks.nl/etc/
#Most important folder
rsync -havzP --delete --stats root@139.162.180.162:/var/www/ /var/www/

You run this ON the new server and PULL in all relevant data FROM the old server.

The trick is to put this script NOT in /home/jan or /root or any of the other folders that you rsync, because with --delete it will be overwritten or removed by rsync.

Another trick is to NOT copy your .ssh directories. It is bad practice and can really mess things up, since rsync uses SSH to connect. Keep the old and new SSH accounts separated! Use different passwords and/or SSH keys for the old and the new server.
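To make the first trick hard to forget, the script can refuse to run when it lives inside one of the synced directories. This is a minimal sketch: `is_sync_target` is a made-up helper name, and the path list simply mirrors the destinations in my script, so yours will differ:

```shell
#!/bin/sh
# is_sync_target PATH: succeed (exit 0) if PATH lies inside one of the
# directories that the rsync script overwrites on the new server.
is_sync_target() {
    case "$1" in
        /home/jan/*|/root/*|/var/www/*|/var/log/apache2/*|/home/backup/server.piks.nl/*)
            return 0 ;;
        *)
            return 1 ;;
    esac
}

# Typical use at the top of the sync script:
#   self=$(readlink -f "$0")
#   if is_sync_target "$self"; then
#       echo "Refusing to run: $self would be overwritten by rsync" >&2
#       exit 1
#   fi
```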

Apache

If you installed from package, Apache should be up and running already.

Extra modules I had to enable:

a2enmod rewrite socache_shmcb ssl authz_groupfile vhost_alias

These modules are not enabled by default, but I find most webservers need them.

Also, on Debian you have to edit Apache’s charset.conf and uncomment the following line:

AddDefaultCharset UTF-8
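If you would rather script that edit than open an editor, something like the following works. It is a sketch, not gospel: `enable_default_charset` is a made-up helper name, and the path in the usage comment is where I would expect the file on Debian, so check it on your own system first:

```shell
#!/bin/sh
# enable_default_charset FILE: uncomment the "AddDefaultCharset UTF-8"
# line in FILE, keeping a copy of the original as FILE.bak.
enable_default_charset() {
    sed -i.bak 's/^#[[:space:]]*\(AddDefaultCharset UTF-8\)/\1/' "$1"
}

# Typical use (path is an assumption):
#   enable_default_charset /etc/apache2/conf-available/charset.conf
#   apache2ctl configtest && systemctl reload apache2
```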

After that you can just copy over your /etc/apache2/sites-available and /etc/apache2/sites-enabled directories from your rsynced folder and you should be good to go.

If you use certbot, no problem: just copy /etc/letsencrypt over to your new server (from the rsync dump). This will work. They’re just files.

But for certbot to run you need to install certbot of course AND finish the migration (change the DNS). Otherwise certbot renewals will fail.

Entering the point of no return

Everything so far was prelude. You now have (most of) your data, a working Apache config with PHP, and an empty database server.

Now the real migration starts.

When you have prepared everything as described above, the actual migration (aka the following steps) should take no more than 10 minutes.

- Stop cron on the old server

You don’t want cron to start doing things in the middle of a migration.

- Stop most things — except SSH and MariaDB/MySQL server — on the old server
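For these two stop steps, a small wrapper that refuses to touch SSH or the database server can save you from a typo at the worst possible moment. This is a minimal sketch, assuming a systemd-based system. `stop_services` and the `APPLY` switch are made up for this example, and the service names are only examples:

```shell
#!/bin/sh
# stop_services NAME...: stop the named services, but never SSH or the
# database server. By default it only prints the commands it would run;
# set APPLY=1 to really run them (as root).
stop_services() {
    for svc in "$@"; do
        case "$svc" in
            ssh|sshd|mariadb|mysql|mysqld)
                echo "# keeping $svc running" ;;
            *)
                if [ "${APPLY:-0}" = "1" ]; then
                    systemctl stop "$svc"
                else
                    echo "systemctl stop $svc"
                fi ;;
        esac
    done
}

# Typical use on the OLD server (service names are examples):
#   stop_services cron apache2 exim4 prosody
#   APPLY=1 stop_services cron apache2 exim4 prosody
```

Running it once without `APPLY=1` shows exactly what will be stopped.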

- Dump the database on the old server

The following one-liner dumps all relevant databases to a SINGLE SQL file (I like it that way):

time echo 'show databases;' | mysql -uroot -pPA$$WORD | grep -v Database| grep -v ^information_schema$ | grep -v ^mysql$ |grep -v ^performance_schema$| xargs mysqldump -uroot -pPA$$WORD --databases > all.sql

You run this right before the migration, after you have shut down everything on the old server (except the MariaDB server). This will dump all NON MariaDB specific databases (i.e. YOUR databases). The other databases (information_schema, performance_schema and mysql): don’t mess with those. The new installation has created those already for you.
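The chain of `grep -v` calls can also be folded into one exact-match filter, which is a bit easier to read and extend. This is a sketch under assumptions: `user_databases` is a made-up helper name, I added `sys` to the skip list because some MySQL/MariaDB versions ship it, and the usage comment assumes the root login works without a password on the command line (socket authentication or a `~/.my.cnf`):

```shell
#!/bin/sh
# user_databases: read database names on stdin (one per line) and print
# only the ones that are not MariaDB/MySQL system schemas. The
# "Database" header line of `show databases` is dropped as well.
# -x matches whole lines only, so "mysql_backup" is kept.
user_databases() {
    grep -v -x -E 'Database|information_schema|performance_schema|mysql|sys'
}

# Typical use on the old server:
#   mysql -e 'show databases' | user_databases \
#       | xargs mysqldump --databases > all.sql
```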

If you want to try an export and import before the migration, the following one-liner drops all databases again (except the default ones) so you can start fresh. This can be handy. Of course DO NOT RUN THIS ON YOUR OLD SERVER. It will drop all databases. Be very, very careful with this one-liner.

mysql -uroot -pPA$$WORD -e "show databases" | grep -v Database | grep -v ^mysql$ | grep -v ^information_schema$ | grep -v ^performance_schema$ | gawk '{print "drop database " $1 ";select sleep(0.1);"}' | mysql -uroot -pPA$$WORD

- Run the rsync again

Rsync everything (including your freshly dumped all.sql file). This rsync will be way faster, since only the changes since the last rsync will be synced.

Next: import the dump on the new server

mysql -u root -p < /home/whereveryouhaveputhisfile/all.sql

You now have a working Apache server and a working MariaDB server with all your data.

Don’t even think about copying raw InnoDB files. You are in for a world of hurt. Dump to SQL and import. It’s the cleanest solution.
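After the import it is worth a ten-second check that every database made it across. This is a minimal sketch: `missing_on_new` and the file names are made up for this example, and the `mysql` calls in the comments again assume a root login that needs no password on the command line:

```shell
#!/bin/sh
# missing_on_new OLD_LIST NEW_LIST: print every line of OLD_LIST that
# does not appear in NEW_LIST. Both files hold one database name per
# line; lines are compared as exact, literal strings.
missing_on_new() {
    grep -F -x -v -f "$2" "$1"
}

# Typical use (file names are examples):
#   old server:  mysql -N -e 'show databases' > old-dbs.txt
#   new server:  mysql -N -e 'show databases' > new-dbs.txt
#   missing_on_new old-dbs.txt new-dbs.txt
# No output means nothing is missing on the new server.
```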

- Enable new crontab

Either copy the files from the old server or just copy-paste the output of crontab -l.

- Change your DNS records!

After this: the migration is effectively complete!
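To see whether a resolver has picked up the new address yet, you can compare what `dig` returns with the IP of the new VPS. This is a minimal sketch, assuming `dig` is installed. `resolves_to` is a made-up helper name, and the domain and address are placeholders:

```shell
#!/bin/sh
# resolves_to IP: read `dig +short` output on stdin and succeed only if
# one of the returned lines is exactly IP.
resolves_to() {
    grep -q -x -F "$1"
}

# Typical use (example.com and 192.0.2.10 are placeholders):
#   dig +short example.com A | resolves_to 192.0.2.10 && echo "DNS switched"
```

Once the domain resolves to the new server, `certbot renew --dry-run` is a cheap way to confirm that renewals will work there.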

Tail your access_logs to see incoming requests, and check the error log for missing things.

tail -f /var/log/apache2/*access.log

tail -f /var/log/apache2/*error.log

Exim

I also needed exim4 on my new server. That’s easy enough.

apt-get install exim4

cp /home/backup/server.piks.nl/etc/exim4/update-exim4.conf.conf /etc/exim4/update-exim4.conf.conf

Update: it turned out I had to do a little bit more than this.