I woke up Sunday morning with an unnerving feeling. A feeling that something had changed. A disturbance in the Force, if you will.

Mainstream media seem blissfully unaware of what happened. Sure, here in the Netherlands we had a small but passionate demonstration on primetime TV, but the NY Times, for example, has so far published *nothing* 🦗🦗

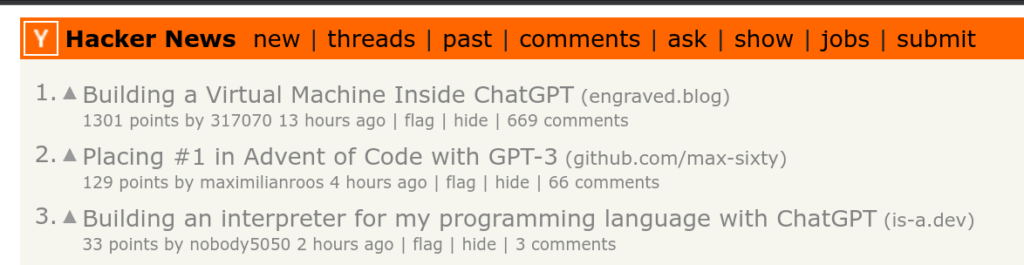

But something most definitely happened. Over the last few days my personal internet bubble has erupted with tweets and blog posts, and it was the top story on every tech site I visit. I have never seen so many people’s minds blown at the same time. It has been called the end of the college paper as well as the end of Google. Things will never be the same. Or so they say.

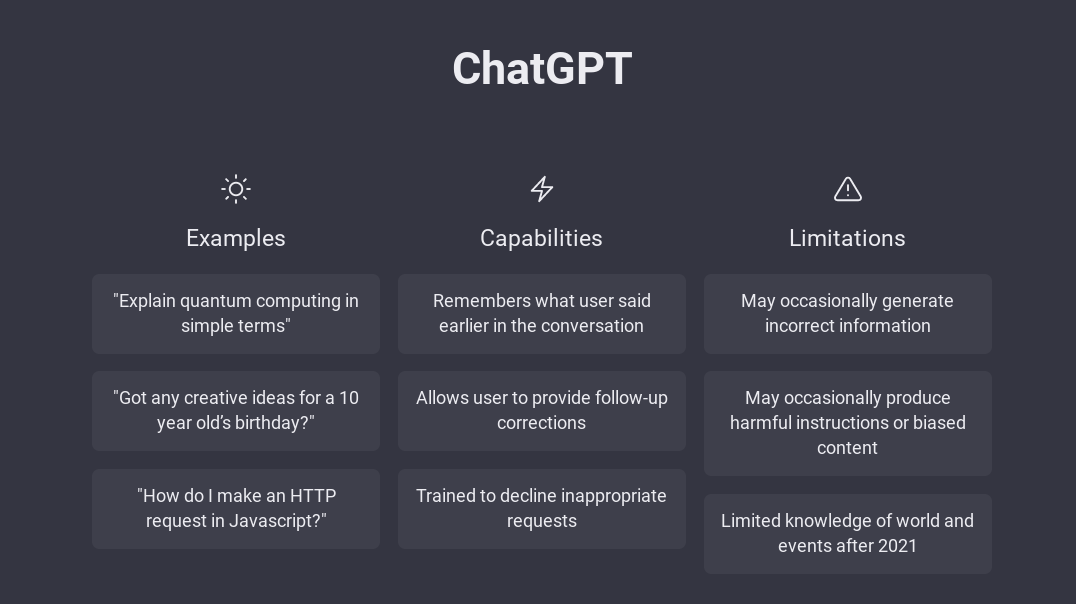

I am, of course, talking about ChatGPT by OpenAI, which is based on GPT-3 (strictly speaking, on a fine-tuned model from the GPT-3.5 series). It’s not AGI, but it’s definitely a glimpse of the future.

This post is an attempt to gather some thoughts and… to test this thing.

When GPT-2 came out a few years ago, it was impressive, but not in an earth-shattering way, because you could still place it on a scale of linear progress. That this thing existed made sense. It was mostly fun, and also a bit quirky. People experimented with it, but the hype died down soon enough, IIRC. This, however, was only the prelude.

GPT-3 was already lurking around the corner and promised to be better. Much better. How much? From what we can now see in ChatGPT, the differences are vast. It is not even in the same ballpark; it is a gigantic leap forward. To do justice to the difference from GPT-2, GPT-3000 would be a better name than the current one.

GPT-3000

It is impressive in two ways:

- The correctness. Everything ChatGPT produces feels very real, lifelike, usable, or whatever you want to call it. The quality of the output is off the charts. It will probably surpass any expectations you have.

- The breadth. There seems to be no limit to what it can do: prose, tests, chess, poetry, dances (not quite yet), business strategy analysis, generating code, finding errors in code, or even simulating a virtual machine (!?) or a BBS. It can seemingly do anything text-related, if you are creative enough in how you ask.

Sure, it’s “just” ‘a machine learning system that has been trained to generate text and answer questions’. But that goes a long way (though of course there are also critical voices).

And for the record: I was surprised by a lot of the examples I saw floating around online. Even though I shouldn’t have been.

Unlike any technology that came before, GPT-3 announces loud and clear that the age of AGI is upon us. And here is the thing: I don’t know how to feel about it! Let alone imagine GPT-4. Because this is only the beginning.

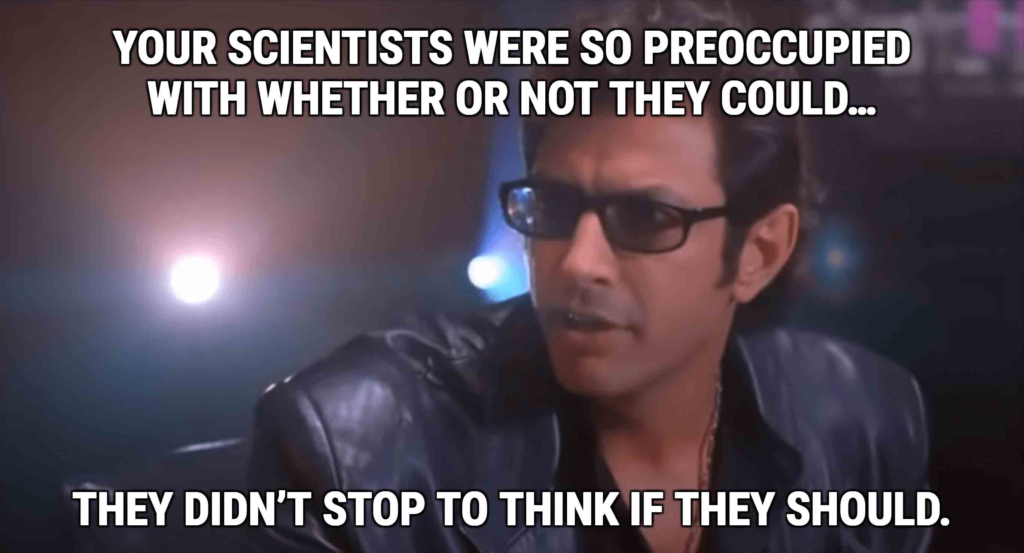

GPT-3 is proof that any technology that can be developed will be developed. In that respect it is like every other technology. But there is one famous human technology with which it shares a more specific characteristic: the atomic bomb. That characteristic being: just because we can doesn’t always mean we should.

But alas, technology doesn’t wait for ethics debates to settle down.

And now that it’s here, let’s deal with it.

First thoughts

I am no Luddite, though I firmly believe that new things are not necessarily better just because they’re new. But with GPT-3 I do feel like a Luddite, because I can’t shake the feeling that we are on the brink of losing something. And in some cases, within a matter of days, we have already lost something. That loss was ultimately inevitable, but it also happened extremely fast.

People have always been resistant or hesitant when new tools arrive. Take the first cameras (or, decades later, digital cameras): instead of seeing possibilities, people initially felt threatened. That is a very human reaction to new things that challenge the old way of doing things. It’s the well-known paradoxical relationship we have with new tools. Paradoxical, because in the end the new tools almost always win. As they should. Tools are what make us human; they are what separates us from almost every other living being. Tools pave the way forward.

And this is just another tool. Right?

But here is the crux: somehow this seems to be more than just a tool. The defining characteristic of a tool is that it enhances human productivity, whereas GPT-3 seems to hint at replacing it.

After decades of sci-fi toying and wrestling with this very theme in hypotheticals (i.e. can AI replace humans?), it has all of a sudden become a very real topic for a lot of people.

No, I do not think it is sentient. But it can do an awful lot.

The future belongs to optimists

Let’s try to look at the arrival of GPT-3 from an optimistic perspective (yes, this could be a GPT-3 prompt). My optimistic take is that GPT-3 (and AGI next) will force us to become more human. Or even better: it will show us what it means to be human.

After all, GPT-3 can do everything else, and do it better (for argument’s sake, let’s just assume that better is better and steer away from a philosophical debate about what better even means).

GPT-3 will cause the bottom to fall out of mediocrity, leaving only the very best that humans have to offer. Anything else can be generated.

So what is that very best that makes us human? What can we do exclusively, that AGI can never do? What is so distinctly human that AGI can never replicate it?

One of the first things that came to mind was whether AGI could write something like *Infinite Jest* or *Crime and Punishment*. Literature. Earth-shattering works of art that simultaneously define and enhance the human experience. In my opinion, literature is the prime example of the ultimate human experience. Could AGI… Before I even finish the question: yes, yes it could.

Is that a bad thing?

What we’re seeing is the infinite monkey theorem in action. AGI can and will produce Shakespeare. The data is there. We have enough monkeys and typewriters.

As long as you feed it enough data, it can do anything. But who feeds it data? Humans (for now). I am not ready to think about what happens when AI starts talking to AI (remember *Her*?). For now, it feeds on and learns from human input.

Maybe AGI is where we find out that we are just prompt fodder and that humans are not so special after all? Maybe that’s why I woke up with an unnerving feeling.

The proof is in the pudding

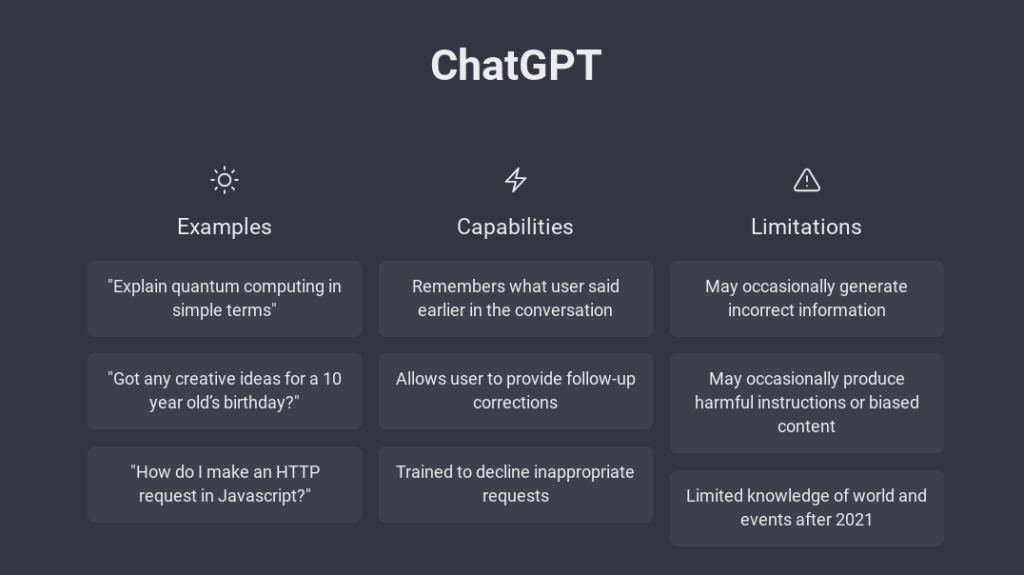

Or maybe it is because ChatGPT could improve everything I have written so far and make it clearer and more concise.

Because it definitely can.

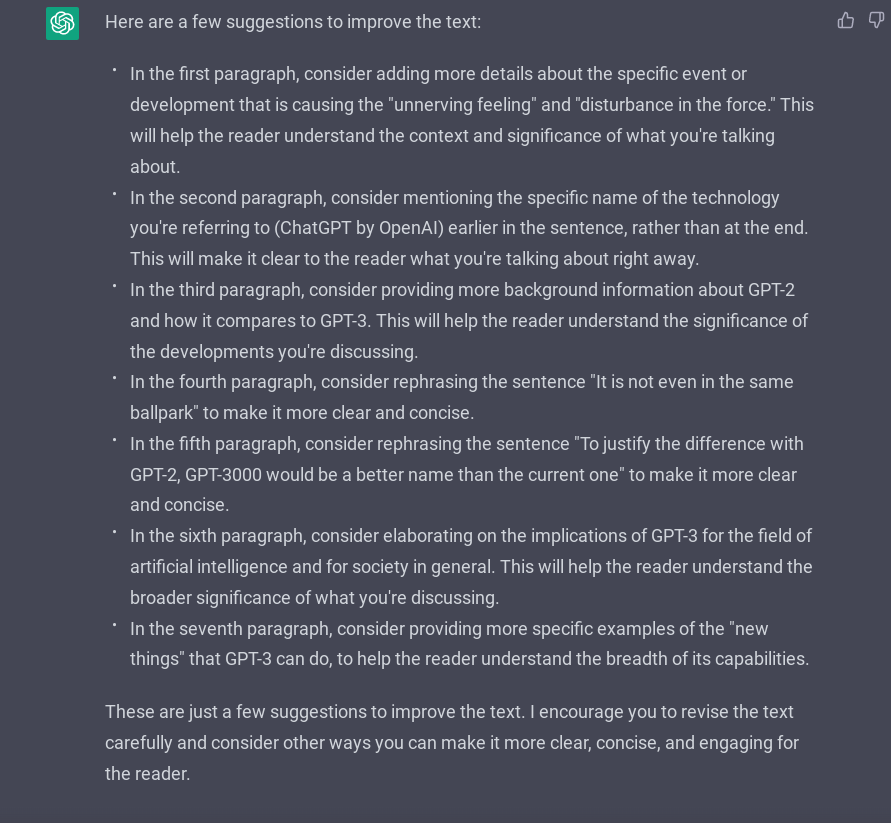

Of course I tried this, and it gave the following suggestions.

First part

The blog post was too long, so I had to split it into two parts.

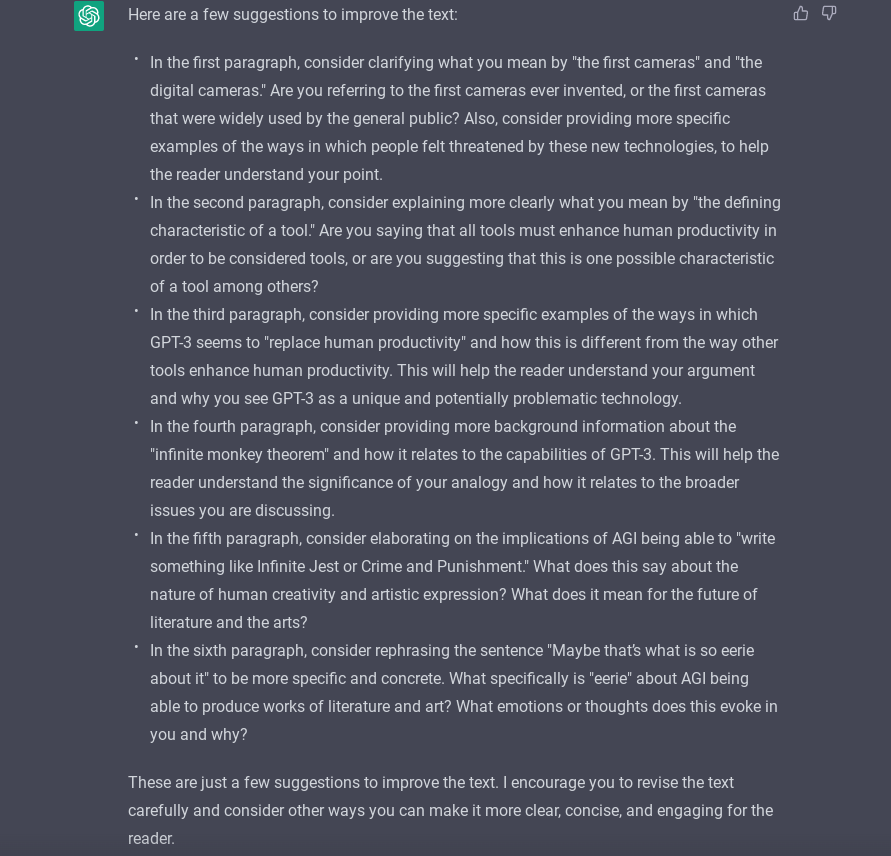

Second part

The first paragraph here is actually the eighth paragraph of the post, and so on.

I have chosen to keep the original text as-is, alongside the suggestions in the screenshots, so you can see the difference.

It is really good. What more can I say?